Simanaitis Says

On cars, old, new and future; science & technology; vintage airplanes, computer flight simulation of them; Sherlockiana; our English language; travel; and other stuff

RESISTING A.I. SLOP—AAAS SCIENCE’S VIEW

H. HOLDEN THORP DESCRIBES “Resisting A.I. Slop,” AAAS Science, January 1, 2026. Given that Thorp is Editor-in-Chief of all Science journals, he’s an excellent source of thoughtful information on this often-hyped topic. Here are tidbits gleaned from his Editorial, together with a BBC view and my own A.I. counterstroke.

AAAS Science’s Guardrails. Dr. Thorp writes, “Science’s most recent policies allow the use of large language models for certain processes without any disclosure, such as editing the text in research papers to improve clarity and readability or assisting in the gathering of references. However, the use of AI beyond that—for example, in drafting manuscript text—must be declared. And the use of AI to create figures is not allowed. All authors must certify and be responsible for all content, including that generated with the aid of AI.”

He continues, “Science also uses AI tools, such as iThenticate and Proofig, to better identify text that has been plagiarized or figures that have been altered.”

What’s more, “Over the past year,” Thorp says, “Science has collaborated with DataSeer to evaluate adherence to its policy mandating the sharing of underlying data and code for all published research articles. The initial results are encouraging in that of 2680 Science papers published between 2021 and 2024, 69% shared data.”

That is, these researchers gave others sufficient means to assess the reproducibility of their claimed results, an important feature of legitimate science.

Catching Errors? Losing Jobs? Thorp assesses, “Although AI is helping Science catch errors that can be corrected or elements that are missing from a paper but should be included, such as supporting code or raw data, its use and the evaluation of the output require more human effort, not less.”

“Indeed,” Thorp continues, “AI is allowing Science to identify problems more rigorously than before, but the reports generated by such tools must be assessed by people. Perhaps the panic over AI assuming jobs will be justified in the long run, but I remain skeptical. Most technological advances have not led to catastrophic job losses.”

Online Courses? Online Journals? Thorp observes, “Higher education seemed under threat 15 years ago with predictions that massive open online courses were going to put universities out of business. That didn’t happen, but online courses did become an important element of education and allowed universities to grow, not shrink.”

“The movement of journals to publishing online,” he describes, “provoked a similar result—it increased the size and scale of scholarly publishing. Acceptance of bombastic statements about the impacts of A.I. on scientific literature should wait for verification.”

“Like many tools,” Thorp concludes, “A.I. will allow the scientific community to do more if it picks the right ways to use it. The community needs to be careful and not be swept up by the hype surrounding every A.I. product.”

Hear! Hear!

BBC’s Identifying A.I. Slop. Amanda Ruggeri offers “The ‘Sift’ Strategy: A Four-Step Method For Spotting Misinformation,” BBC, May 10, 2024. She quotes Marcia McNutt, president of the U.S. National Academy of Sciences (and a predecessor of H. Holden Thorp as Science Editor-in-Chief, 2013–2016): “Misinformation is worse than an epidemic. It spreads at the speed of light throughout the globe and can prove deadly when it reinforces misplaced personal bias against all trustworthy evidence.”

The “Sift” Method. “One of my favourites,” BBC’s Ruggeri describes, “comes with a nifty acronym: the Sift method. Pioneered by digital literacy expert Mike Caulfield, it breaks down into four easy-to-remember steps.”

S: Stop. Don’t immediately share a post. Don’t comment on it. Instead, move on to the next step.

I: Investigate. Do an independent web search. Ruggeri suggests, “One that fact-checkers often use as a first port of call might surprise you: Wikipedia. While it’s not perfect, it has the benefit of being crowd-sourced, which means that its articles about specific well-known people or organisations often cover aspects like controversies and political biases.”

I concur.

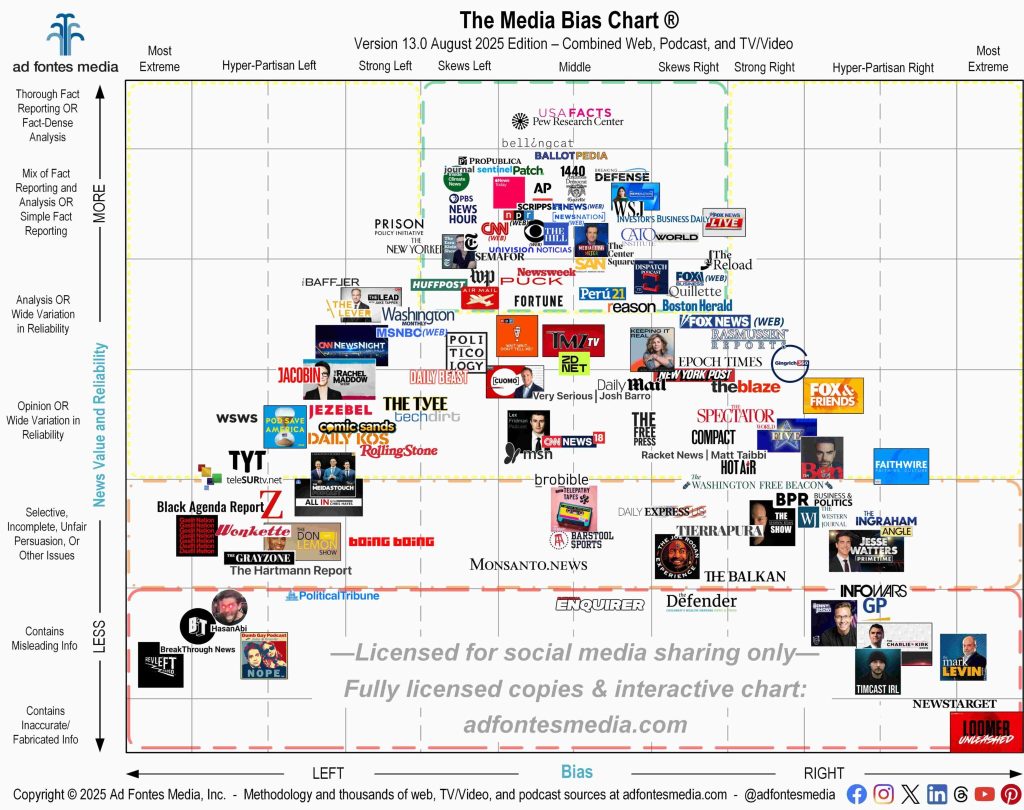

She also suggests checking the political leanings of sources. I’ve used allsides.com, adfontesmedia.com, and mediabiasfactcheck.com.

Image from adfontesmedia.com.

Also, the suffix .edu is useful (though, of course, you need to be aware of school creds as well).

F: Find Better Coverage. That is, digging a little deeper never hurts. As I’ve noted here, ain’t research fun!

T: Trace The Claim to Its Original Context. This, you’ll note, accords with Thorp’s stress on identifying data and methodology.

My Added Identification of A.I. “Support” Slop. Increasingly, firms use A.I. robots as the first response to phone calls. My key to recognizing one is an “A” word: “accommodating.” An overly polite attempt at accommodating a request, albeit with no real solution, is a clear giveaway of a robot at work.

Upon recognizing this, I respond by striving to reach a human. Try “0,” “*,” or “#,” perhaps multiple times. Just wait. Or, if all else fails, try “Cancel my account.” See wikiHow.com.

And good luck. ds

© Dennis Simanaitis, SimanaitisSays.com, 2026


Information

This entry was posted on January 24, 2026 by simanaitissays in I Usta be an Editor Y'Know and tagged "adfontesmedia.com" spectrum of media, "Resisting A.I. Slop" H. Holden Thorp AAAS "Science", "SIFT": stop/investigate/find another source/trace original, "The 'Sift' Strategy: A Four-Step Method For Spotting Misinformation" Amanda Ruggeri "BBC", "wikiHow.com" getting a human on the line, AAAS "Science" A.I. guardrails, author's A:accommodation (for identifying A.I. robots).

2 comments on “RESISTING A.I. SLOP—AAAS SCIENCE’S VIEW”


Dennis, have you heard the term “sludging”? It describes the frustrating rigamarole that some companies’ “customer service” contacts use to avoid handling customer issues. Help lines are not always helpful. They are often designed to make the customer so mad that they just give up, hang up, or “end the chat” and go away. For the business, that’s problem solved.

Ha. New to me but not surprising. —ds