Simanaitis Says

On cars, old, new and future; science & technology; vintage airplanes, computer flight simulation of them; Sherlockiana; our English language; travel; and other stuff

A.I. HALLUCINATIONS ON THE RISE

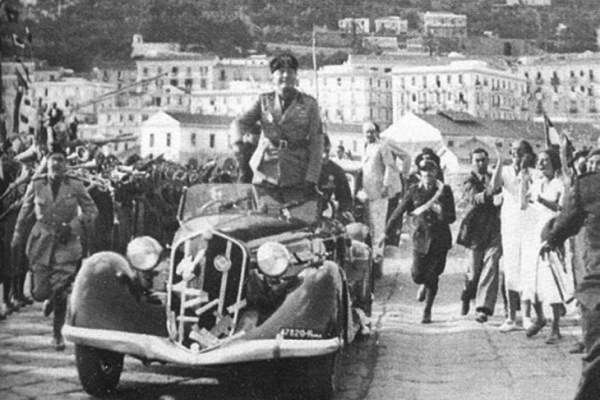

I KINDA SAW THIS COMING with Google searches occasionally offering corrupted versions of SimanaitisSays comments: “Dennis Simanaitis says Benito Mussolini and Ercole Boratto won the 1936 Mille Miglia,” or some such garbling of Large Language Model data scraping.

Benito Mussolini, 1883–1945, Italian Fascist dictator, Il Duce, standing; Ercole Boratto, 1886–1979, Italian race driver, Mussolini’s chauffeur and “confidente.” The car is Mussolini’s 1935 Alfa Romeo 6C 2300 Sport Spyder Pescara, which Boratto drove in the 1936 Mille Miglia. Image from Kidston: Keep It Alive via SimanaitisSays.

More Power, More Hallucinations. Indeed, just recently Cade Metz and Karen Weise report “A.I. Is Getting More Powerful, But Its Hallucinations Are Getting Worse,” The New York Times, May 5, 2025.

Metz and Weise recount, “More than two years after the arrival of ChatGPT, tech companies, office workers and everyday consumers are using A.I. bots for an increasingly wide array of tasks. But there is still no way of ensuring that these systems produce accurate information. The newest and most powerful technologies — so-called reasoning systems from companies like OpenAI, Google and the Chinese start-up DeepSeek — are generating more errors, not fewer. As their math skills have notably improved, their handle on facts has gotten shakier. It is not entirely clear why.”

Large Language Models. “Today’s A.I. bots,” Metz and Weise describe, “are based on complex mathematical systems that learn their skills by analyzing enormous amounts of digital data. They do not—and cannot—decide what is true and what is false. Sometimes, they just make stuff up, a phenomenon some A.I. researchers call hallucinations. On one test, the hallucination rates of newer A.I. systems were as high as 79 percent.”

Image by Eric Carter for The New York Times.

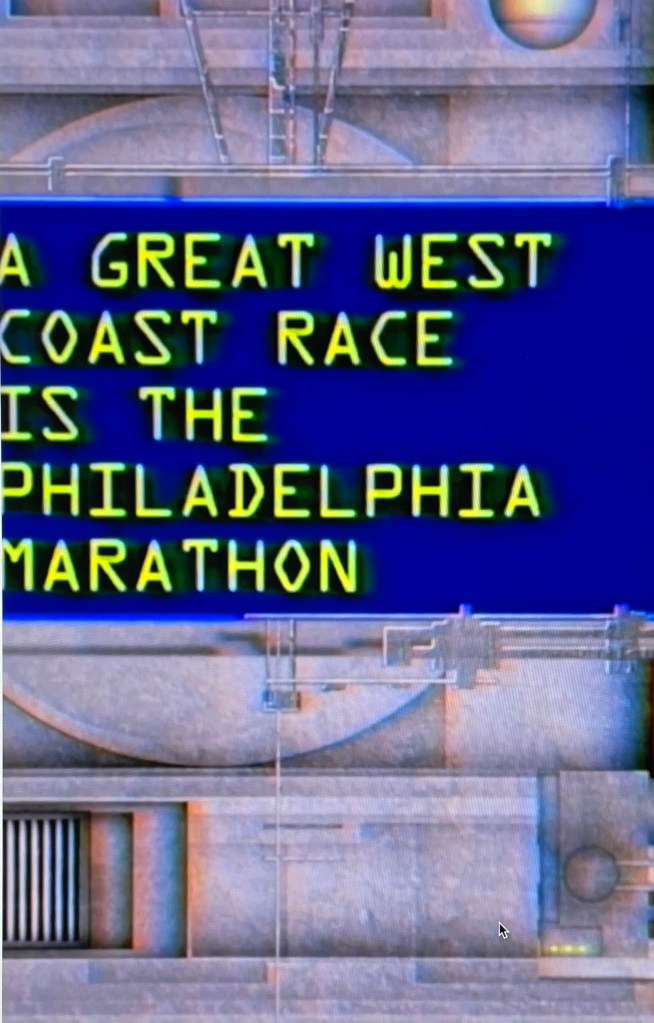

The Times researchers cite, “The A.I. bots tied to search engines like Google and Bing sometimes generate search results that are laughably wrong. If you ask them for a good marathon on the West Coast, they might suggest a race in Philadelphia. If they tell you the number of households in Illinois, they might cite a source that does not include that information.”

Image by Pablo DelCan for The New York Times via “A.I. GIGO.”

I recall an attorney’s A.I.-generated legalese citing court cases that didn’t exist. And there are the occasional misquotes of SimanaitisSays.

Metz and Weise observe, “Those hallucinations may not be a big problem for many people, but it is a serious issue for anyone using the technology with court documents, medical information or sensitive business data.”

Can A.I. Ever Develop Honesty? Metz and Weise quote a specialist: “ ‘Despite our best efforts, they will always hallucinate,’ said Amr Awadallah, the chief executive of Vectara, a start-up that builds A.I. tools for businesses, and a former Google executive. ‘That will never go away.’ ”

“For more than two years,” The Times researchers observe, “companies like OpenAI and Google steadily improved their A.I. systems and reduced the frequency of these errors. But with the use of new reasoning systems, errors are rising. The latest OpenAI systems hallucinate at a higher rate than the company’s previous system, according to the company’s own tests.”

The Times researchers recount, “The company found that o3—its most powerful system—hallucinated 33 percent of the time when running its PersonQA benchmark test, which involves answering questions about public figures. That is more than twice the hallucination rate of OpenAI’s previous reasoning system, called o1. The new o4-mini hallucinated at an even higher rate: 48 percent.”

They continue, “When running another test called SimpleQA, which asks more general questions, the hallucination rates for o3 and o4-mini were 51 percent and 79 percent. The previous system, o1, hallucinated 44 percent of the time.”

Who’s At Fault? “In a paper detailing the tests,” Metz and Weise relate, “OpenAI said more research was needed to understand the cause of these results. Because A.I. systems learn from more data than people can wrap their heads around, technologists struggle to determine why they behave in the ways they do.”

Metz and Weise continue, “Hannaneh Hajishirzi, a professor at the University of Washington and a researcher with the Allen Institute for Artificial Intelligence, is part of a team that recently devised a way of tracing a system’s behavior back to the individual pieces of data it was trained on. But because systems learn from so much data—and because they can generate almost anything—this new tool can’t explain everything. ‘We still don’t know how these models work exactly,’ she said.”

It’s kinda like giving A.I. an “open-book test,” without saying which books are allowed or anything about the books’ veracity.

Reinforcement Learning. “So,” The Times researchers write, “these companies are leaning more heavily on a technique that scientists call reinforcement learning. With this process, a system can learn behavior through trial and error. It is working well in certain areas, like math and computer programming. But it is falling short in other areas.”

“ ‘What the system says it is thinking is not necessarily what it is thinking,’ said Aryo Pradipta Gema, an A.I. researcher at the University of Edinburgh and a fellow at Anthropic.”

Geez. Like a lazy C- student. ds

© Dennis Simanaitis, SimanaitisSays.com, 2025


4 comments on “A.I. HALLUCINATIONS ON THE RISE”


This entry was posted on May 9, 2025 by simanaitissays in Sci-Tech and tagged "A.I. Is Getting More Powerful But Its Hallucinations Are Getting Worse" Cade Metz and Karen Weise "The New York Times", A.I. "reinforcement learning": trial and error, A.I. hallucination: "Great West Coast Race The Philadelphia Marathon", more powerful A.I. produce more hallucinations, occasional garbled A.I. citation of "SimanaitisSays", OPENAI examples of power rise accompanying by error rise.


And then these hallucinations become part of the mass of data that LLMs use and reinforce the mess.

Clearly, the answer to this problem is forty two.

Or maybe Thursday.

Let me ask my man Friday…