Simanaitis Says

On cars, old, new and future; science & technology; vintage airplanes, computer flight simulation of them; Sherlockiana; our English language; travel; and other stuff

MORE A.I. BS

QUITE INDEPENDENT OF RECENT BUSINESS MACHINATIONS in the A.I. industry, there still remains the inherent problem of addressing hallucinations. SimanaitisSays discussed this in “On Chatbots and Other Hallucinators.” And in The New York Times, November 6, 2023 (updated November 16), Cade Metz writes, “Chatbots May ‘Hallucinate’ More Often Than Many Realize.”

A.I. Firing on Less Than All Cylinders. Metz recounts, “When the San Francisco start-up OpenAI unveiled its ChatGPT online chatbot late last year, millions were wowed by the humanlike way it answered questions, wrote poetry and discussed almost any topic. But most people were slow to realize that this new kind of chatbot often makes things up.”

To the ChatGPT fake court cases cited in SimanaitisSays, he adds other bizarre hallucinations: “When Google introduced a similar chatbot several weeks later, it spewed nonsense about the James Webb telescope. The next day, Microsoft’s new Bing chatbot offered up all sorts of bogus information about the Gap, Mexican nightlife and the singer Billie Eilish.”

If A.I. is So Smart, How Come It Makes Up Stuff? An inherent part of Large Language Model technology is its amassing data from whatever sources it’s fed, then using complex algorithms to identify patterns. As Metz notes, “By pinpointing patterns in all that data, an L.L.M. learns to do one thing in particular: guess the next word in a sequence of words.”

“Because,” Metz notes, “the internet is filled with untruthful information, these systems repeat the same untruths. They also rely on probabilities: What is the mathematical chance that the next word is ‘playwright’? From time to time, they guess incorrectly.”

As suggested long ago with the Infinite Monkeys/Infinite Typewriters analogy: “Was this the face that ____” can be followed by a lot more than “launched a thousand ships.”
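For the tinkerers among us, here is a minimal Python sketch of that next-word guessing, offered as a toy and not as how any real chatbot is built. The word list and its probabilities are invented purely for illustration, and the names `NEXT_WORD_PROBS`, `guess_next_word`, and `count_guesses` are hypothetical. A real L.L.M. weighs a vastly larger vocabulary using probabilities learned from its training data.

```python
import random

# Hypothetical probabilities for the word following
# "Was this the face that ____". These numbers are invented
# for illustration; they are not taken from any real model.
NEXT_WORD_PROBS = {
    "launched": 0.90,
    "sank": 0.04,
    "stopped": 0.03,
    "painted": 0.03,
}


def guess_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


def count_guesses(probs, trials, seed=0):
    """Repeat the guess many times and tally which words come up."""
    rng = random.Random(seed)
    counts = {word: 0 for word in probs}
    for _ in range(trials):
        counts[guess_next_word(probs, rng)] += 1
    return counts


if __name__ == "__main__":
    for word, n in count_guesses(NEXT_WORD_PROBS, trials=1000).items():
        print(f"Was this the face that {word} ...: {n} of 1000 guesses")
```

Run it and Marlowe’s “launched” wins most of the time, but not every time. The occasional “sank” or “painted” is the toy’s version of a hallucination: a plausible-looking guess that happens to be wrong.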

If it’s a Chatbot parlour game (ha; now there’s an odd pairing of the new and old), hallucinations are no big deal. But if it’s a medical diagnosis…

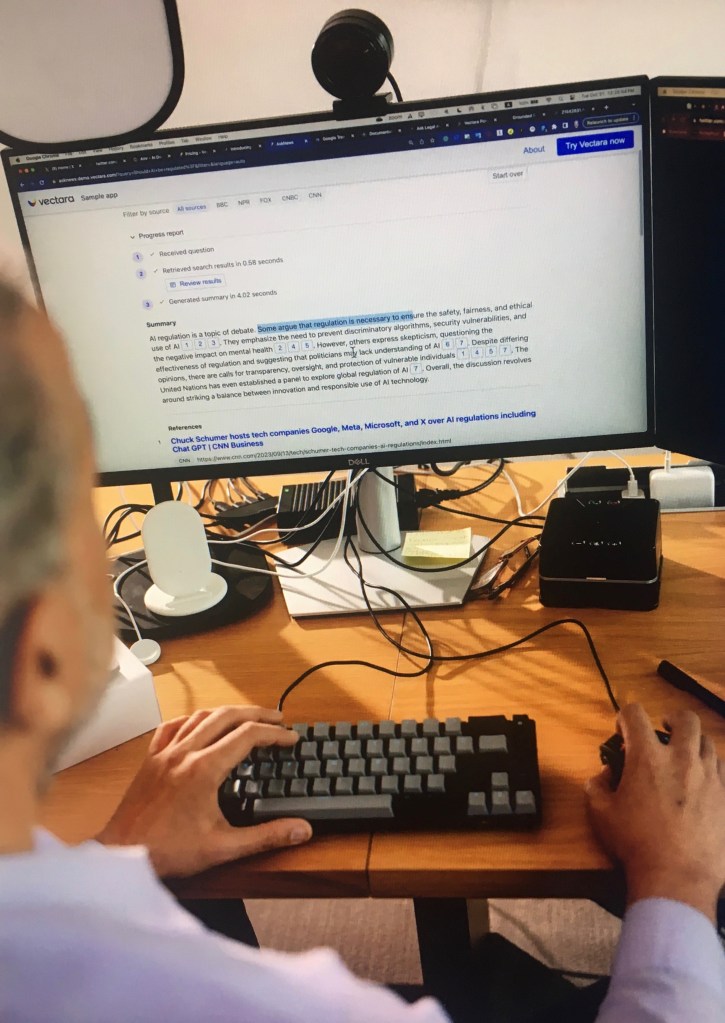

Challenging the Hallucinators. Metz says, “Now a new start-up called Vectara, founded by former Google employees, is trying to figure out how often chatbots veer from the truth. The company’s research estimates that even in situations designed to prevent it from happening, chatbots invent information at least 3 percent of the time — and as high as 27 percent.”

Even 3 percent would appear unacceptable in engineering, medical, or legal contexts. But more than a quarter of the time is rubbish, not “intelligence.”

Wheat or Chaff. Nor is separating A.I. wheat from chaff a trivial process. Metz quotes a Vectara researcher: “Because these chatbots can respond to almost any request in an unlimited number of ways, there is no way of definitively determining how often they hallucinate. ‘You would have to look at all of the world’s information,’ said Simon Hughes, the Vectara researcher who led the project.”

With one approach, researchers gave A.I.s 10 to 20 facts and asked for a summary. “Even then,” Metz notes, “the chatbots persistently invented information…. The researchers argue that when these chatbots perform other tasks—beyond mere summarization—hallucination rates may be higher.”

Metz reports, “Their research also showed that hallucination rates vary widely among the leading A.I. companies. OpenAI’s technologies had the lowest rate, around 3 percent. Systems from Meta, which owns Facebook and Instagram, hovered around 5 percent. The Claude 2 system offered by Anthropic, an OpenAI rival also based in San Francisco, topped 8 percent. A Google system, Palm chat, had the highest rate at 27 percent.”
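To put those percentages in perspective, here is a bit of back-of-the-envelope arithmetic in Python. The rates are the rounded figures Metz quotes (“around 3,” “around 5,” “topped 8,” and 27 percent), so treat them as approximations. The batch of 1,000 summaries is my own assumption for illustration, not a number from the Vectara study, and the name `expected_hallucinated` is hypothetical.

```python
# Approximate hallucination rates, in percent, as quoted by Metz.
# "Around" and "topped" in the source mean these are rounded values.
REPORTED_RATES_PERCENT = {
    "OpenAI": 3,
    "Meta": 5,
    "Anthropic Claude 2": 8,
    "Google Palm chat": 27,
}


def expected_hallucinated(rate_percent, n_summaries):
    """Expected number of summaries containing invented information."""
    return round(n_summaries * rate_percent / 100)


if __name__ == "__main__":
    n_summaries = 1000  # assumed batch size, for illustration only
    for system, rate in REPORTED_RATES_PERCENT.items():
        bad = expected_hallucinated(rate, n_summaries)
        print(f"{system}: about {bad} of {n_summaries} summaries")
```

At the low end, that is roughly 30 tainted summaries per thousand; at the high end, roughly 270.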

A.I.’s Inherent Shortcoming (and Humanity’s Advantage). Of course, the chatbot isn’t just a nervous kid making stuff up when urged. Metz notes, “Because chatbots learn from patterns in data and operate according to probabilities, they behave in unwanted ways at least some of the time.”

And so it is with humans, with one important difference: We too analyze data to draw probabilistic conclusions. But sage reasoners do so without the hubris of searching all available data: They select the most reliable data sets, and thus reach less hallucinatory conclusions.

Otherwise, it’s that old computer saw: GIGO. “Garbage in, garbage out.” ds

© Dennis Simanaitis, SimanaitisSays.com, 2023