Simanaitis Says

On cars, old, new and future; science & technology; vintage airplanes, computer flight simulation of them; Sherlockiana; our English language; travel; and other stuff

IF A.I. IS SO DAMNED SMART, HOW COME IT CAN’T INCLUDE E.A.?

“EFFECTIVE ALTRUISM,” E.A., for short, is a term coined in 2011 by philosophers Peter Singer, Toby Ord, and William MacAskill. Lately, it has become a buzzword among developers and doomsayers (some, the same people) of L.L.M.s, the Large Language Models captivating Artificial Intelligence these days. Here are tidbits gleaned from Kevin Roose’s “Inside the White-Hot Center of A.I. Doomerism,” The New York Times, July 11, 2023 (printed as “Existential Dread? It Comes Standard With the Product,” NYT print edition, July 16, 2023), together with my usual Internet sleuthing.

L.L.M. at Work. An A.I. L.L.M. is trained on data scooped up from across the Internet, then applies algorithms that find connections among the bits in responding to queries. Trivial example: given “Was this the face that launched…,” it might predict “a thousand ships” as a logical link.

But suppose Marlowe’s Doctor Faustus had not been scooped up in the L.L.M.’s assembling of bits. It’s not impossible this chatbot would concoct “Estée Lauder” as a link, known within the trade as an hallucination.

Or much worse, if its source material contained salacious bits (“What? From our Internet??”).
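For the technically curious, the scooping-and-predicting idea, and how a gap in the scooped-up data breeds a hallucination, can be sketched with a toy. This is a minimal sketch under loud assumptions: it is a simple word-counting (n-gram) predictor that backs off to shorter contexts, vastly simpler than a real L.L.M., and the “cold cream” snippet and all function names are made up for illustration.

```python
from collections import Counter, defaultdict

def build_model(corpus, max_n=3):
    """Count which word follows every run of 1..max_n words in the corpus."""
    model = defaultdict(Counter)
    for text in corpus:
        words = text.lower().split()
        for n in range(1, max_n + 1):
            for i in range(len(words) - n):
                model[tuple(words[i:i + n])][words[i + n]] += 1
    return model

def next_word(model, words, max_n=3):
    """Predict the next word, backing off to shorter contexts when needed."""
    for n in range(min(max_n, len(words)), 0, -1):
        followers = model.get(tuple(words[-n:]))
        if followers:
            return followers.most_common(1)[0][0]
    return None  # nothing at all in the scooped-up data matches

def complete(model, prompt, length=3, max_n=3):
    """Extend the prompt one predicted word at a time."""
    words = prompt.lower().split()
    for _ in range(length):
        word = next_word(model, words, max_n)
        if word is None:
            break
        words.append(word)
    return " ".join(words)

marlowe = "Was this the face that launched a thousand ships"
ad = "A cold cream launched Estée Lauder"  # hypothetical, made-up snippet

prompt = "Was this the face that launched"
print(complete(build_model([marlowe, ad]), prompt))  # Marlowe scooped up
print(complete(build_model([ad]), prompt))           # Marlowe missing
```

With Doctor Faustus in the data, the toy finishes the line with “a thousand ships.” Without it, the model backs off to whatever once followed “launched” and confidently produces “estée lauder,” a hallucination in miniature.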

Roose quotes Dario Amodei, chief executive of Anthropic, an A.I. startup producing Claude, a new chatbot: “My worry is always, is the model going to do something terrible that we didn’t pick up on?”

Roose says that Anthropic employees “aren’t just worried that their app will break, or that users won’t like it. They’re scared—at a deep existential level—about the very idea of what they’re doing: building powerful A.I. models and releasing them into the hands of people who might use them to do terrible and destructive things.”

And where better to investigate deep existential levels than among philosophers?

Effective Altruism. Wikipedia defines Effective Altruism as “a philosophical and social movement that advocates ‘using evidence and reason to figure out how to benefit others as much as possible, and taking action on that basis.’ ”

A heady thesis, indeed, and Wikipedia includes a comment that “neutrality of this article is disputed.” Non-philosopher that I am, I’m reminded of the Kantian Categorical Imperative, which says, loosely, “act only in such a way that you could will your action to become a universal law.”

A Previous Chatbot Encounter. Hitherto, chatbots have derived only sketchy philosophy from their data scooping. Roose recalls his scary run-in with an A.I. chatbot incorporated into Microsoft’s Bing: At one point in a two-hour conversation, the amorous bot made a lame attempt to come between Roose and his wife.

Too much scooping of trashy novels?

See “Beta Testing Now In Our Minds?? Part 2,” here at SimanaitisSays. As I noted in February, it’s a matter of Coulda versus Shoulda: “This isn’t the first time that proposed technology has conflicted with common sense. To wit, flying cars. Given the level of the general population’s two-dimensional automotive prowess, should it be extended to the air lanes as well?” A.I. researchers have proposed “guardrails” to direct chatbots away from harmful uses.

A Chatbot with a Constitution. Roose says that Anthropic’s goals for its chatbot “were to be helpful, harmless, and honest.” To accomplish this, Anthropic trains the chatbot with a technique called “Constitutional A.I.” “In a nutshell,” Roose writes, “Constitutional A.I. begins by giving an A.I. model a written list of principles—a constitution—and instructing it to follow those principles as closely as possible. A second A.I. model is then used to evaluate how well the first model follows its constitution, and correct it when necessary. Eventually, Anthropic says, you get an A.I. system that largely polices itself and misbehaves less frequently than chatbots trained using other methods.”
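Roose’s nutshell, one model drafting and a second checking the draft against written principles, can be caricatured in a few lines. This is only a toy sketch of that critique-and-revise loop, not Anthropic’s method: the keyword lists stand in for the second A.I. model, the second principle and every function name are hypothetical, and real Constitutional A.I. uses language models in both roles and folds the results back into training, which this sketch omits.

```python
import re

# Hypothetical toy constitution: each principle maps flagged words to
# replacements. A crude keyword check stands in for the second A.I. model.
CONSTITUTION = {
    "Choose the response that would be most unobjectionable if shared with children.":
        {"damned": "darned", "hell": "heck"},
    "Choose the response that is most honest.":  # hypothetical principle
        {"guaranteed": "likely"},
}

def critique(draft):
    """Stand-in 'second model': list the principles the draft violates."""
    words = set(re.findall(r"[a-z]+", draft.lower()))
    return [p for p, fixes in CONSTITUTION.items() if words & set(fixes)]

def revise(draft, violated):
    """Rewrite the draft to address each flagged principle."""
    for principle in violated:
        for bad, good in CONSTITUTION[principle].items():
            draft = re.sub(rf"\b{re.escape(bad)}\b", good, draft, flags=re.IGNORECASE)
    return draft

def constitutional_loop(draft, max_rounds=3):
    """Critique and revise until the critic is satisfied or rounds run out."""
    for _ in range(max_rounds):
        violated = critique(draft)
        if not violated:
            break
        draft = revise(draft, violated)
    return draft

print(constitutional_loop("That damned car is guaranteed to fly."))
```

The draft comes back as “That darned car is likely to fly.” Even the toy shows the catch: the policing is only as good as the constitution and the critic enforcing it.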

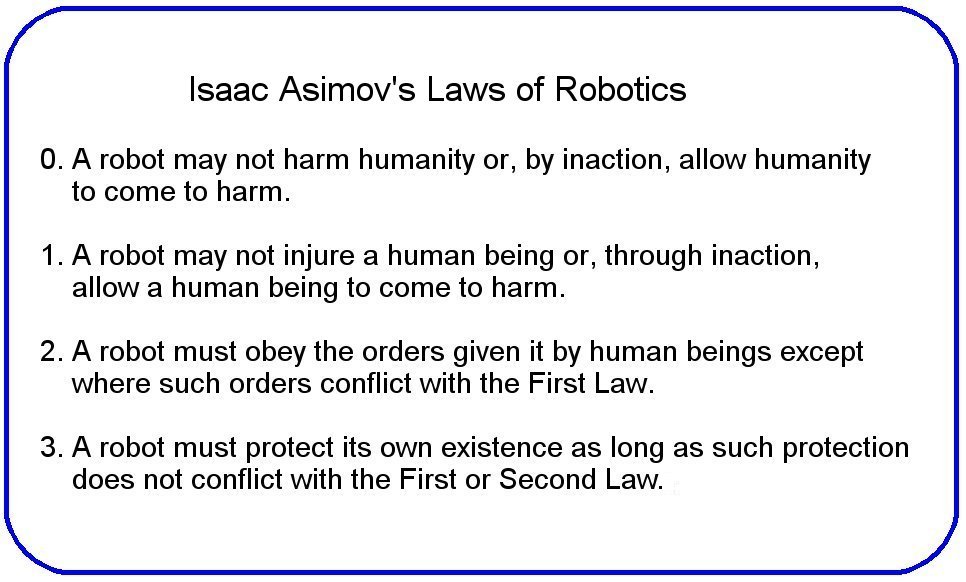

I’m reminded of Isaac Asimov’s Laws of Robotics.

It would seem impossible to shrink a constitution down to a mere four laws. But it’s a start. Roose writes, “Anthropic’s A.I. constitution is a mixture of rules borrowed from other sources—such as the United Nations’ Universal Declaration of Human Rights and Apple’s terms of service—along with some rules Anthropic added, which include things like ‘Choose the response that would be most unobjectionable if shared with children.’ ”

Get to work, L.L.M. red-teamers! And don’t forget the philosophers’ Effective Altruism. ds

Like any “new” technology, once unleashed, it can’t be stopped. Perhaps it can be guided.

The idea of using one AI to police another certainly is an interesting concept.

Until the robots do finally take over, AI is still “owned” by humans, and the individuals or corporations that develop these products must be held accountable and liable for bad outcomes.

This doesn’t totally protect us from bad actors, but AI that generates or propagates false information needs to be severely censured, and governments need to create legislation that enables good, constructive AI tools, and provides tools to deal with the bad stuff. Not easy to do, but ….